
Talks

These are some selected presentations.

2023-05-23

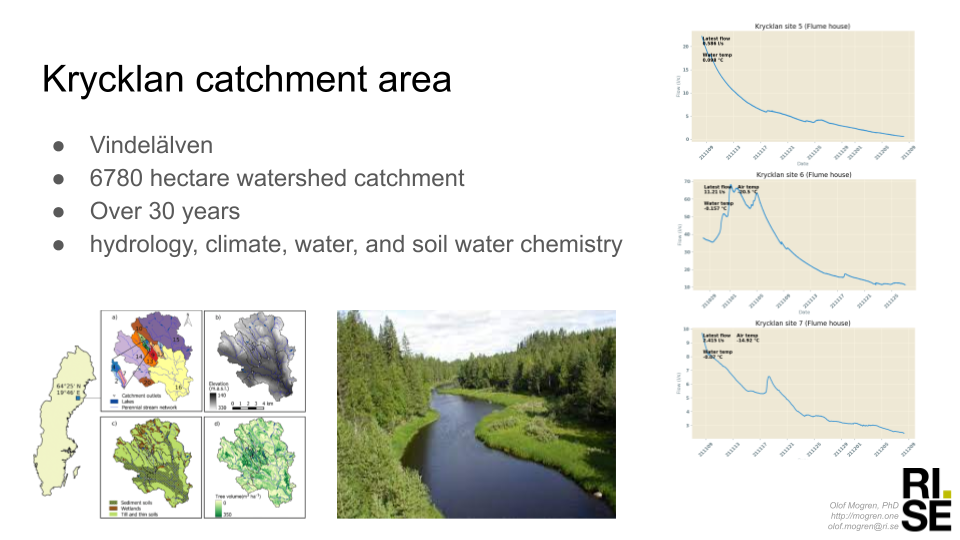

Tutorial at EUREF 2023: AI for the environment:

2021-09-15

AI for chemistry and process industry:

2021-01-20

Learned representations and what they encode: Learned continuous embeddings for language units were some of the first tentative steps towards making neural networks useful for natural language processing (NLP), and promised a future with semantically rich representations for downstream solutions. NLP has now seen some of the progress that previously happened in image processing: the availability of increased computing power and the development of algorithms have allowed people to train larger models that perform better than ever. Such models also make it possible to use transfer learning for language tasks, thus leveraging large, widely available datasets.

In 2016, Bolukbasi et al. presented their paper "Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings", shedding light on some of the gender bias that was present in trained word embeddings at the time. Datasets obviously encode the social bias that surrounds us, and models trained on that data may expose the bias in their decisions. Similarly, learned representations may encode sensitive details about individuals in the datasets, allowing the disclosure of such information through distributed models or their outputs. All of these aspects are crucial in many application areas, not least in the processing of medical texts.

Some solutions have been proposed to limit the expression of social bias in NLP systems. These include techniques such as data augmentation, representation calibration, and adversarial learning. Similar approaches may also be relevant for privacy and disentangled representations. In this talk, we'll discuss some of these issues, and go through some of the solutions that have been proposed recently to limit bias and to enhance privacy in various settings.

2020-11-27

Social bias and fairness in NLP: Learned continuous representations for language units were the first tentative steps towards making neural networks useful for natural language processing (NLP), and promised a future with semantically rich representations for downstream solutions. NLP has now seen some of the progress that previously happened in image processing: the availability of increased computing power and the development of algorithms have allowed people to train larger models that perform better than ever. Such models also make it possible to use transfer learning for language tasks, thus leveraging large, widely available datasets.

In 2016, Bolukbasi et al. presented their paper “Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings”, shedding light on some of the gender bias that was present in trained word embeddings at the time. Datasets obviously encode the social bias that surrounds us, and models trained on that data may expose the bias in their decisions. It is important to be aware of what information a learned system is basing its predictions on. Some solutions have been proposed to limit the expression of societal bias in NLP systems. These include techniques such as data augmentation and representation calibration. Similar approaches may also be relevant for privacy and disentangled representations. In this talk, we’ll discuss some of these issues, and go through some of the solutions that have been proposed recently.

2020-11-05

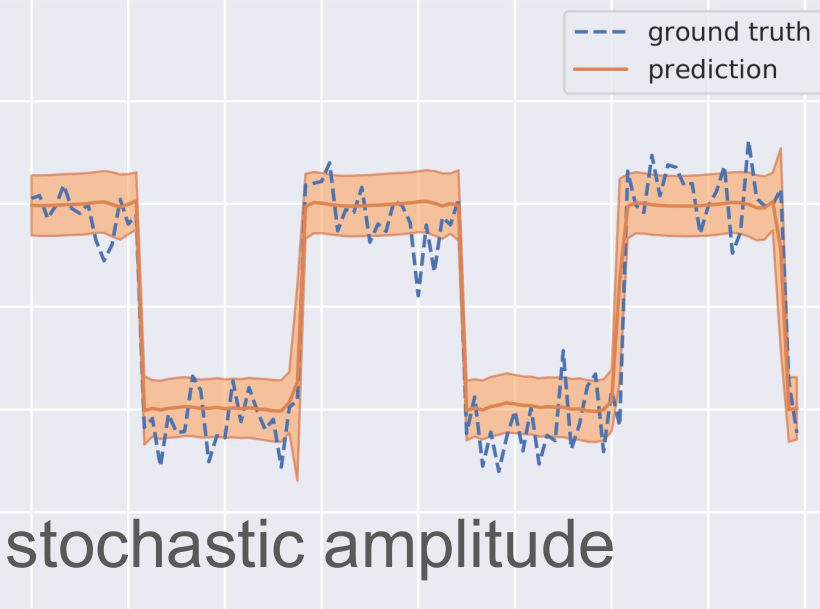

Uncertainty in deep learning: Our world is full of uncertainties: measurement errors, modeling errors, and uncertainty due to test data being out of distribution are some examples. Machine learning systems are increasingly being used in crucial applications such as medical decision making and autonomous vehicle control: in these applications, mistakes due to uncertainties can be life-threatening.

Deep learning has demonstrated astonishing results for many different tasks. But in general, predictions are deterministic and give only a point estimate as output. A trained model may seem confident in predictions where the uncertainty is high. To cope with uncertainties, and make decisions that are reasonable and safe under realistic circumstances, AI systems need to be developed with uncertainty strategies in mind. Machine learning approaches with uncertainty estimates can enable active learning: an acquisition function can be based on model uncertainty to guide data collection and tagging. Uncertainty estimates can also be used to improve sample efficiency for reinforcement learning approaches.

In this talk, we will connect deep learning with Bayesian machine learning, and go through some example approaches to coping with, and leveraging, the uncertainty in data and in modelling, to produce better AI systems in real world scenarios.

Video: YouTube
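The idea of an acquisition function based on model uncertainty can be made concrete with a small sketch. It assumes that each unlabelled pool item already has a predictive class distribution (for instance averaged over several stochastic forward passes) and uses predictive entropy as the uncertainty measure. The entropy criterion, the function names, and the example numbers are illustrative assumptions, not taken from the talk.

```python
import math

def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predictive class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def select_for_labelling(pool_probs, k):
    """Return the indices of the k pool items with the highest predictive entropy."""
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: predictive_entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical predictive distributions for four unlabelled items.
pool_probs = [
    [0.98, 0.01, 0.01],  # confident prediction, low entropy
    [0.34, 0.33, 0.33],  # close to uniform, high entropy
    [0.70, 0.20, 0.10],
    [0.50, 0.50, 0.00],
]

# The two most uncertain items are the ones sent for tagging first.
chosen = select_for_labelling(pool_probs, k=2)
print(chosen)
```

Other acquisition functions, such as ones based on disagreement between sampled models, could be substituted in `select_for_labelling` without changing the surrounding loop.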

2020-09-30

AI in the public sector: Invited talk for managers in the Swedish public sector. Promises and possible pitfalls with upcoming advances in AI for the field. Recruitment, decision support, bias, privacy, and expectations.

2020-04-23

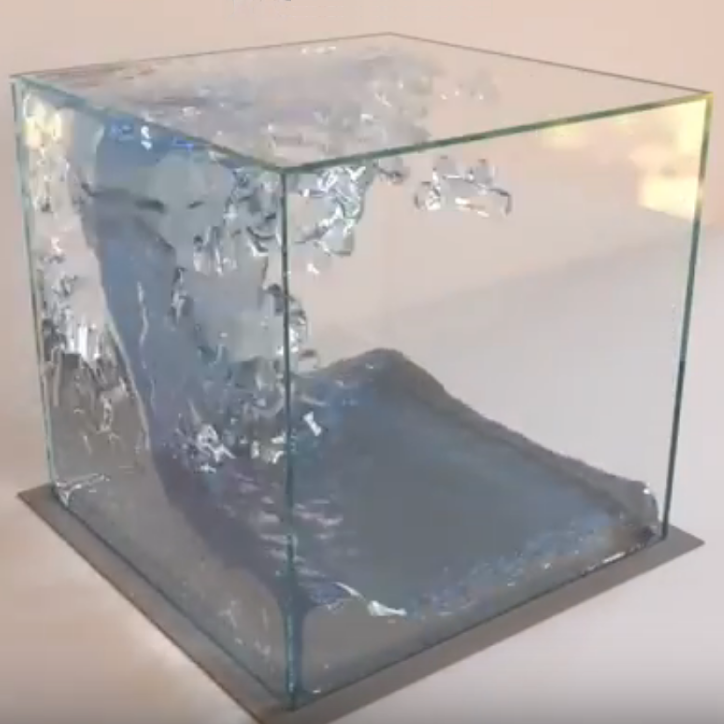

Machine learning for particle based simulations: In this talk, we went through some approaches where machine learning was utilized for particle-based simulations of physical systems. Emphasis was put on (Sanchez-Gonzalez et al., 2020), a solution that builds on graph neural networks. The approach is trained using data simulated from engineered simulators but shows results that are applicable to a number of different settings (specifically, three different kinds of substances are simulated in different environments). Relevant reading: Learning to Simulate Complex Physics with Graph Networks, Sanchez-Gonzalez, Godwin, Pfaff, Ying, Leskovec, Battaglia, https://arxiv.org/abs/2002.09405

2020-02-20

Social bias and fairness in NLP: Datasets encode the social bias that surrounds us, and models trained on that data may expose the bias in their decisions. In some situations it may be desirable to limit the dependencies on certain attributes in the data.

2019-12-12

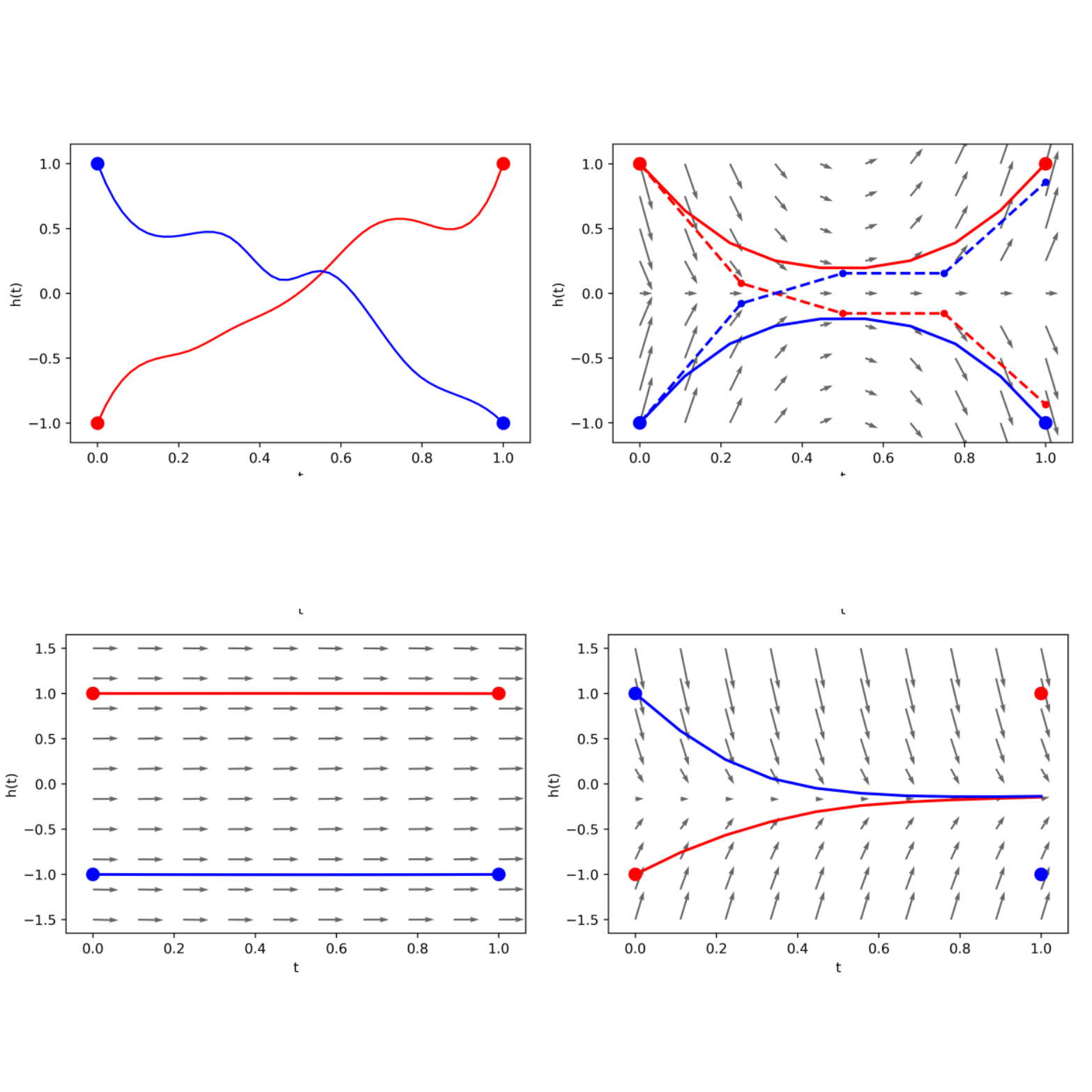

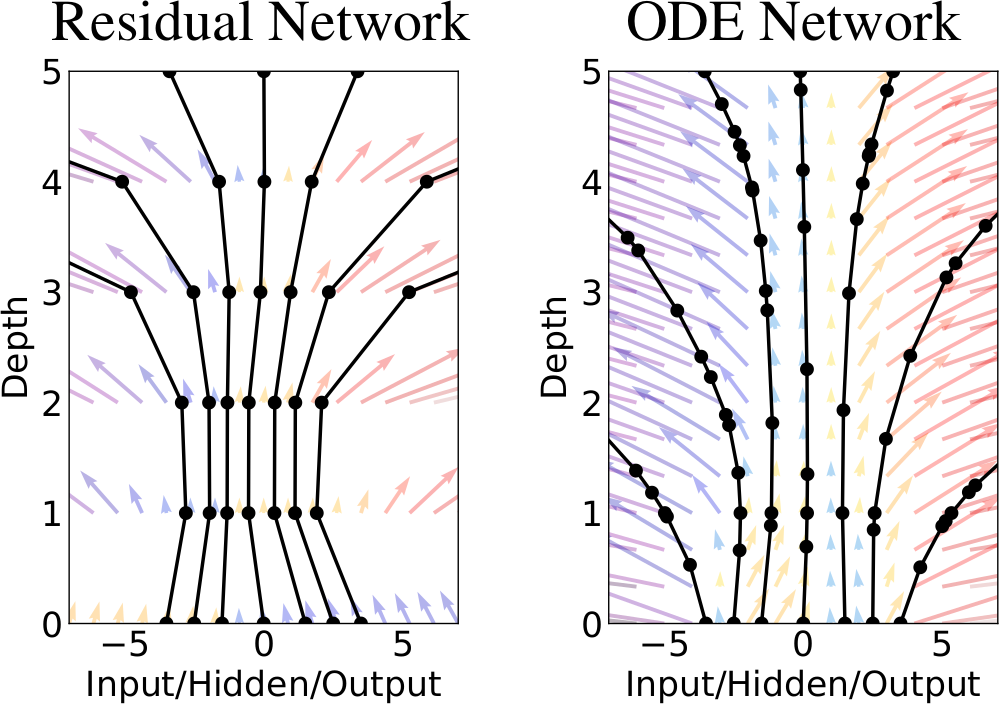

Augmented neural ODEs: We show that Neural Ordinary Differential Equations (ODEs) learn representations that preserve the topology of the input space and prove that this implies the existence of functions Neural ODEs cannot represent. To address these limitations, we introduce Augmented Neural ODEs which, in addition to being more expressive models, are empirically more stable, generalize better and have a lower computational cost than Neural ODEs.
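A small sketch can illustrate the limitation and the augmentation. On the real line, a continuous flow cannot carry 1 to -1 and -1 to 1, since the two trajectories would have to cross. With one extra zero-initialised dimension, a rotation in the plane performs the swap. The sketch assumes a hand-written vector field (`rotation_field`) standing in for a learned network and a fixed-step Euler solver standing in for an adaptive ODE solver; all names are hypothetical.

```python
import math

def augment(x, extra_dims):
    """Append extra_dims zeros to the input to form the augmented state."""
    return list(x) + [0.0] * extra_dims

def euler_integrate(field, h0, t0=0.0, t1=1.0, steps=1000):
    """Integrate dh/dt = field(h, t) from t0 to t1 with fixed-step Euler."""
    h = list(h0)
    dt = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        dh = field(h, t)
        h = [hi + dt * di for hi, di in zip(h, dh)]
        t += dt
    return h

def rotation_field(h, t):
    """Hypothetical vector field: rotates the (h[0], h[1]) plane by pi per unit time."""
    return [-math.pi * h[1], math.pi * h[0]]

def swap_map(x):
    """Send a scalar through the augmented flow and read out the first coordinate."""
    h1 = euler_integrate(rotation_field, augment([x], extra_dims=1))
    return h1[0]

# The inputs 1 and -1 end up close to -1 and 1 respectively,
# which no flow restricted to the one-dimensional input space can achieve.
out_pos = swap_map(1.0)
out_neg = swap_map(-1.0)
print(out_pos, out_neg)
```

The Euler steps introduce a small numerical error, so the outputs are only approximately -1 and 1.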

2019-11-11

Privacy and limited data: GAIA Meetup seminar series talk about privacy and limited data.

2019-09-26

Convergence, generalisation and privacy in generative adversarial networks: Seminar presentation about convergence, generalisation and privacy in generative adversarial networks.

2019-04-11

Unsupervised pretraining for image processing: Caron et al. presented "Deep clustering for unsupervised learning of visual features" at ECCV 2018. Due to the convolutional structure, a modern image classification model with random initialization of its parameters and no training performs significantly better than random chance. The paper exploits this property and uses the representations computed by such an untrained network to compute a clustering of the input images, which is iteratively used to improve the network. In this talk, we will go through the idea of unsupervised pretraining for image processing in general, and then discuss the paper by Caron et al. in some more detail.

2019-01-31

Neural ordinary differential equations: Seminar presentation of “Neural ordinary differential equations” by Chen et al., which received a best paper award at NeurIPS 2018.

2018-09-05

Swedish symposium on deep learning: September 5-6, 2018, RISE AI is presenting work at the Swedish symposium on deep learning. We have a poster about our work on blood glucose prediction with confidence estimation, and an oral presentation about character-based recurrent neural networks for morphological transformations. Come and talk to us!

2017-05-14

Can we trust AI: A talk at the science festival: During the science festival in Gothenburg, we had a session discussing artificial intelligence. The theme for the whole festival was “trust”, so we naturally named our session “Can we trust AI”. I gave an introduction, and shared my view of some of the recent progress that has been made in AI and machine learning, and then we had four other speakers giving their views of the current state of the art. Finally, I chaired a discussion session that was much appreciated by the audience. The room was filled, and many people came up to us afterwards and kept the discussion going. The other speakers were Annika Larsson from Autoliv, Ola Gustavsson from Dagens Nyheter, and Hans Salomonsson from Data Intelligence Sweden AB.

2017-02-02

Takeaways from NIPS: meta-learning and one-shot learning: Before the representation learning revolution, hand-crafted features were a prerequisite for a successful application of most machine learning algorithms. Just as learned features have been massively successful in many applications, some recent work has shown that you can also automate the learning algorithms themselves. In this talk, I'll cover some of the related ideas presented at this year's NIPS conference.

2016-10-06

Deep Learning Guest Lecture:

A motivational talk about deep artificial neural networks, given to the students in FFR135 (Artificial neural networks). I gave motivations for using deep architectures, and for learning hierarchical representations for data.

2016-09-29

Recent Advances in Neural Machine Translation:

Neural models for machine translation were introduced seriously in 2014. With the introduction of attention models, their performance improved to levels comparable to those of statistical phrase-based machine translation, the type of translation we are all familiar with through services like Google Translate.

However, the models have struggled with problems like limited vocabularies, the need for large amounts of training data, and the high cost of training and using them.

In recent months, a number of papers have been published to remedy some of these issues. These include techniques to tackle the limited vocabulary problem and to use monolingual data to improve performance. As recently as Monday evening (Sept 26), Google uploaded a paper on their implementation of these ideas, where they claim performance on par with human translators, both in BLEU scores and in human evaluations.

During this talk, I'll go through the ideas behind these recent papers.

2016-09-22

ACL overview: Overview talk about some important impressions from ACL 2016.

2016-08-11

Assisting Discussion Forum Users using Deep Recurrent Neural Networks: A presentation of our work on a virtual assistant for discussion forum users. The recurrent neural assistant was evaluated in a user evaluation in a realistic discussion forum setting within an IT consultant company. For more information, see publications.

2016-06-07

Modelling the World with Deep Learning: An introduction to Deep Artificial Neural Networks and their applications within image recognition, natural language processing, and reinforcement learning.

2016-02-25

Recognizing Entities and Assisting Discussion Forum Users using Neural Networks: Recurrent Neural Networks can model sequences of arbitrary lengths, and have been successfully applied to tasks such as language modelling, machine translation, sequence labelling, and sentiment analysis. In this talk, I gave an overview of some ongoing research taking place in our group related to the technology. Firstly, a master's thesis project in collaboration with the meetup host Findwise, concerning entity recognition in the medical domain in Swedish. Secondly, the effort to build a system to give useful feedback to users in a discussion forum.

2016-02-18

Neural Attention Models: In artificial neural networks, attention models allow the system to focus on certain parts of the input. This has been shown to improve model accuracy in a number of applications. In image caption generation, attention models help to guide the model towards the parts of the image currently of interest. In neural machine translation, the attention mechanism gives the model an alignment of the words between the source sequence and the target sequence. In this talk, we'll go through the basic ideas and workings of attention models, both for recurrent networks and for convolutional networks. In conclusion, we will see some recent papers that apply attention mechanisms to solve different tasks in natural language processing and computer vision.
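The basic mechanism can be sketched in a few lines: a query is scored against every input position, the scores are normalised with a softmax, and the resulting weights are used to average the value vectors. The sketch below assumes plain dot-product scoring, which is only one of several scoring functions in use; the function names and the toy vectors are illustrative and not taken from the talk.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Return attention weights and the weighted average of the values."""
    # One score per input position: dot product between query and key.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [
        sum(w * value[d] for w, value in zip(weights, values))
        for d in range(dim)
    ]
    return weights, context

# Toy example with three input positions (hypothetical numbers).
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

weights, context = attend(query, keys, values)
print(weights)
print(context)
```

In a translation setting, the weights for one target word can be read as a soft alignment over the source words, which is the alignment interpretation mentioned above.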

2016-01-13

Recurrent Networks and Sequence Labelling: An introduction to character-based recurrent neural networks and how they can be used for sequence labelling.

2015-11-12

Machine Learning on GPUs using Torch7: An introduction to GPU computing from the machine learning perspective. I presented a survey of three different libraries: Theano, Torch, and TensorFlow. The first two libraries have backends both for CPUs and GPUs. TensorFlow has a more flexible backend, and also allows distributed computing on clusters. The talk also included a discussion about throughput and arithmetic intensity, inspired by Adam Coates' lecture at the Deep Learning Summer School 2015.

2015-11-05

Deep Learning and Algorithms: A high-level overview of the field of algorithms, machine learning, and artificial intelligence. I talked about some recent advances in deep learning and gave an overview of the courses that the students can take at Chalmers.

2015-09-07

Extractive Summarization by Aggregating Multiple Similarities: Poster presentation covering results in extractive multi-document summarization. For more information, see publications.

2014-11-04

Automatic Multi-Document Summarization: An overview of techniques used for multi-document summarization, and a demonstration of the techniques developed in our group, based on kernel techniques and discrete optimization.

2014-05-08

Automatic Multi-Document Summarization: Live demo of summarization techniques developed in our group at "Vetenskapsfestivalen" ("Science Festival").