From Weak to Strong Sound Event Labels using Adaptive Change-Point Detection and Active Learning

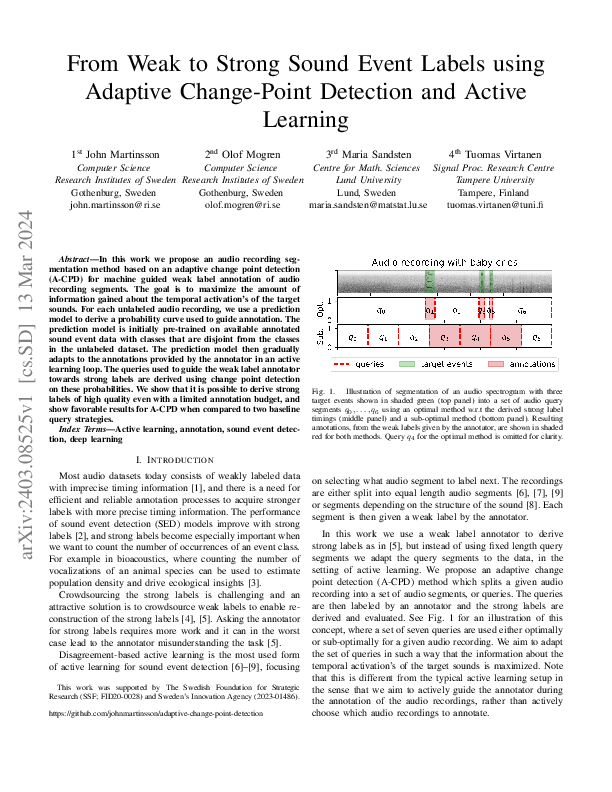

In this work we propose an audio recording segmentation method based on adaptive change point detection (A-CPD) for machine-guided weak label annotation of audio recording segments. The goal is to maximize the amount of information gained about the temporal activations of the target sounds. For each unlabeled audio recording, we use a prediction model to derive a probability curve that guides annotation. The prediction model is initially pre-trained on available annotated sound event data with classes that are disjoint from the classes in the unlabeled dataset. It then gradually adapts to the annotations provided by the annotator in an active learning loop. The queries used to guide the weak label annotator towards strong labels are derived using change point detection on these probabilities. We show that it is possible to derive strong labels of high quality even with a limited annotation budget, and we show favorable results for A-CPD when compared to two baseline query strategies.
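To make the idea of deriving queries from a probability curve concrete, here is a minimal sketch. It is a simplified stand-in, not the paper's A-CPD implementation: it assumes a per-frame probability array is already available, and it places segment boundaries at the largest absolute frame-to-frame changes. The function name `query_segments` and the parameters `n_queries` and `frame_dur` are hypothetical.

```python
import numpy as np


def query_segments(probs, n_queries, frame_dur=1.0):
    """Split a recording into at most n_queries segments.

    Illustrative heuristic only: boundaries are placed where the
    predicted probability changes the most between adjacent frames.
    """
    probs = np.asarray(probs, dtype=float)
    # Absolute first difference: large where predicted activity changes.
    change = np.abs(np.diff(probs))
    # n_queries segments need n_queries - 1 interior boundaries.
    k = max(0, min(n_queries - 1, change.size))
    if k > 0:
        # change[i] sits between frame i and i + 1, so the boundary is i + 1.
        top = np.sort(np.argsort(change)[::-1][:k] + 1)
    else:
        top = np.array([], dtype=int)
    bounds = np.concatenate(([0], top, [probs.size]))
    # Convert frame indices to (start, end) times in seconds.
    return [
        (float(s) * frame_dur, float(e) * frame_dur)
        for s, e in zip(bounds[:-1], bounds[1:])
    ]


# Toy curve: one event active in frames 2 to 4.
curve = [0.1, 0.1, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1]
segments = query_segments(curve, n_queries=3)
```

Each returned segment would then be presented to the annotator for a weak (presence or absence) label. On a noisy curve this naive top-k rule can pick adjacent frames, so a practical version would likely smooth the curve or enforce a minimum distance between boundaries.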

John Martinsson, Olof Mogren, Maria Sandsten, Tuomas Virtanen

European Signal Processing Conference

DOI: https://doi.org/10.48550/arXiv.2403.08525

arXiv: 2403.08525
