EMNLP 2017

2017-09-13

A few days ago the EMNLP conference (Empirical Methods in Natural Language Processing) took place in Copenhagen. With this post, I hope to share some of the high-level observations I made during the conference about trends in the area, or at least to gather my thoughts a bit after five days of intense soaking of information.

Subword-level modeling

Throughout EMNLP, subword-level models was a recurring topic. One of the most attended workshops was SCLeM (Subword and character-level methods), organized by Hinrich Schütze, Yadollah Yaghoobzadeh, Manaal Faruqui, and Isabel Trancoso, a workshop with an exciting line-up of invited speakers.

Subword-level models solve natural language tasks on the raw character-stream, without the need to tokenize the text (split it into word-tokens). Eliminating tokenization also eliminates a substantial source of errors and lets the model work with an open vocabulary (it can generalize to unseen words). A neural subword-level model can learn where the boundaries are for chunks of the text that carries meaning, and can get efficiency gains from not having to consider distributions over large vocabularies in the output layers. Subword models are de-facto standard in neural machine translation since late 2016, and the idea is spreading to many other tasks.

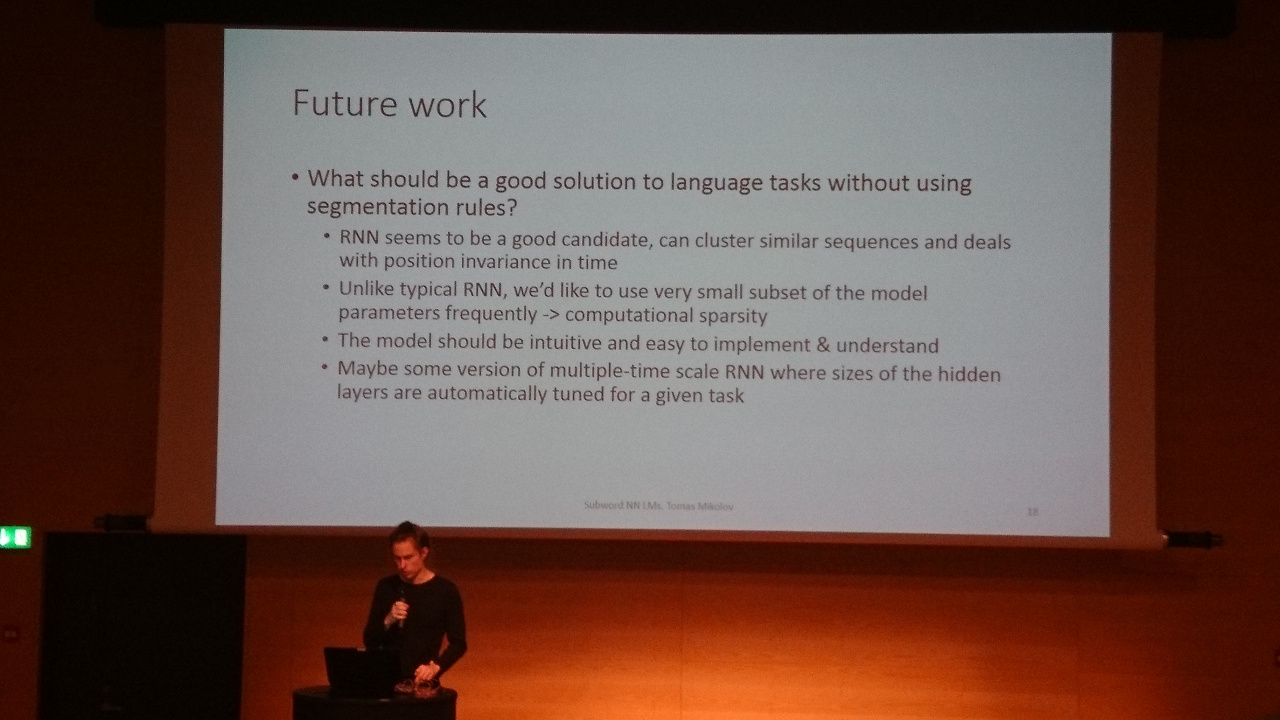

Thomáš Mikolov gave a thorough walk-through on the history of neural language modelling, NLM (Wikipedia) on subword-level. He explained that working on word-level is suboptimal when there is character variability, e.g. as in Czech. Mikolov called the word-based language models introduced in 2007 a “pseudo-solution”. In 2010, the RNN LMs were introduced, but the performance of these models working on characters was not satisfactory, or they required too much time to train. Still today, character-based RNN LMs perform slightly worse than their word-based counterparts, but they are not limited to the vocabulary seen at training time. Mikolov mentioned some of their recent papers on reducing computation in RNNs: Alternative structures for character-level RNNs, Piotr Bojanowski, Armand Joulin, Tomáš Mikolov, ICLR 2016, (PDF, openreview.net), and on training an RNN to learn when to update the weights: Variable Computation in Recurrent Neural Networks, Yacine Jernite, Edouard Grave, Armand Joulin, Tomas Mikolov, ICLR 2017 (PDF, arxiv.org).

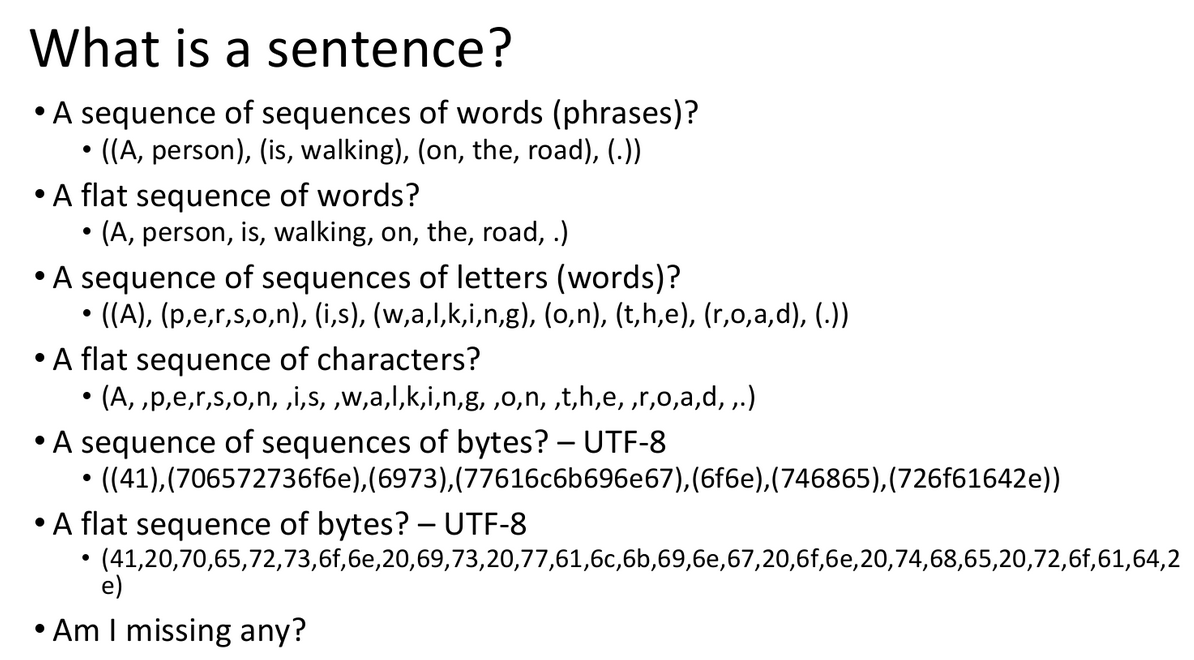

Kyunghyun Cho started his talk with the question “What is a sentence?” He explained that looking up “sentence” on Wikipedia does not give you an answer to this question. It gives you many pointers as to how a sentence should be, but not what it is. This lead into a discussion about how our machine learning systems should best read our language (see figure). In 2015, neural machine translation had improved to a point where to OOV tokens had become a limiting factor. Some solutions to this was Sennrich et.al., ACL 2016, (PDF, aclweb.org), using byte-pair-encoding, BPE, to compute a subword vocabulary, and Luong and Manning, ACL 2016, (PDF, aclweb.org), using a hybrid approach with a character-based RNN as fallback for OOV (out-of-vocabulary) terms. These approaches still required the preprocessing step of tokenization. Chung et al., ACL 2016, (PDF, aclweb.org) proposed a model with a decoder working only with characters, without any tokenization neccessary. The attention mechanism works perfectly, even when the encoder works on subwords, and the decoder works with raw characters. It consistently obtains results better than or equally good as the models using subword units on both sides.

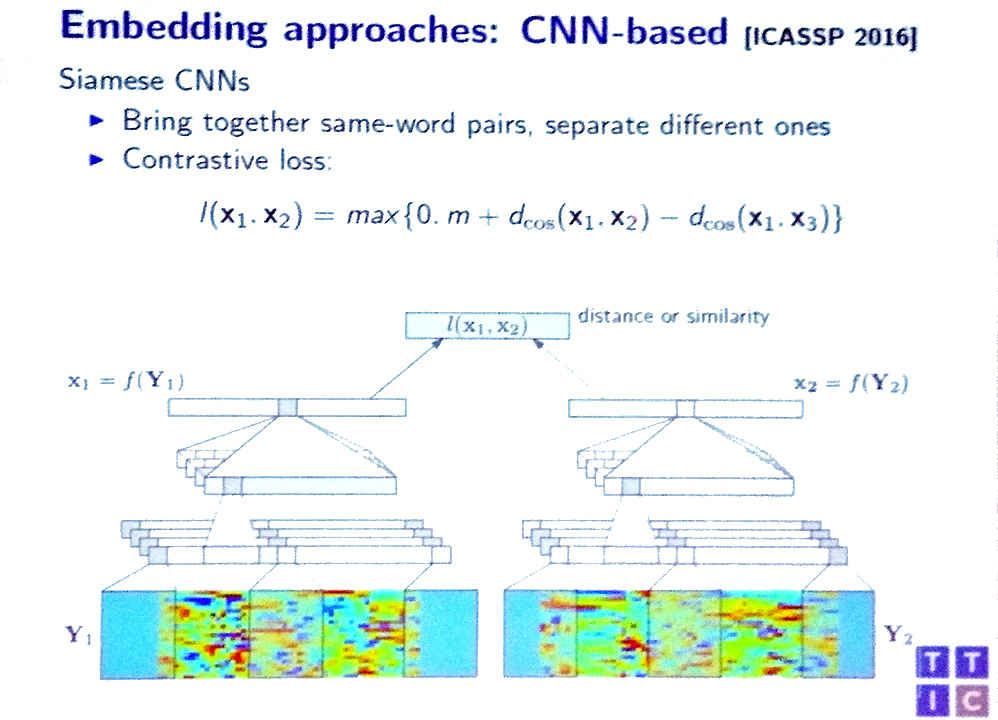

Karen Livescu described her work on embedding speech into vector representations using convnets, something that can be used for query-by-example, and can easily learn intra-speaker variations. These acoustic embeddings can be trained jointly with textual embeddings, by using a form of siamese training. She declared that the goal is to do NLP directly on the speech signal, but that there is much work left to do.

In the SCLeM poster sessions we presented our work on Character-based recurrent neural networks for morphological relational reasoning (mogren.one), where we train a character-based RNN model on how to represent morphological transformations of words, and can transfer these transformations between words. Our poster was in good company of 23 other posters with a range of related topics, from morphology to embeddings of parts of Chinese characters.

The subword-level trend is an exciting development as it does away with one more preprocessing step, and lets the model learn a larger part of the problem. Learning as much as possible generally makes for robust NLP solutions. In the workshop panel discussion, Sharon Goldwater declared that some bias is preferable in NLP models because it can help reduce the dependency on large datasets, a concern that can be motivated in some low-resource settings. The splitting of words however, is often arbitrary, and the rules about where a space should be in a character sequence differs between languages. Subword level models are certainly something we will see more of in the future.

Some related papers from the main conference:

- Joint Embeddings of Chinese Words, Characters, and Fine-grained Subcharacter Components, Jinxing Yu; Xun Jian; Hao Xin; Yangqiu Song, (PDF, aclweb.org)

- A Sub-Character Architecture for Korean Language Processing, Karl Stratos, (PDF, aclweb.org)

- Cross-lingual Character-Level Neural Morphological Tagging, Ryan Cotterell and Georg Heigold, (PDF, aclweb.org)

- Charmanteau: Character Embedding Models For Portmanteau Creation, Varun Gangal; Harsh Jhamtani; Graham Neubig; Eduard Hovy; Eric Nyberg, (PDF, aclweb.org)

- Mimicking Word Embeddings using Subword RNNs, Yuval Pinter, Robert Guthrie, Jacob Eisenstein, (PDF, aclweb.org)

Multilingual NLP

Besides machine translation being the topic of a large chunk of this year’s papers, multilingual work was definately a trend throughout the whole conference. (Machine translation and multilinguality made up about a third of all submitted papers this year).

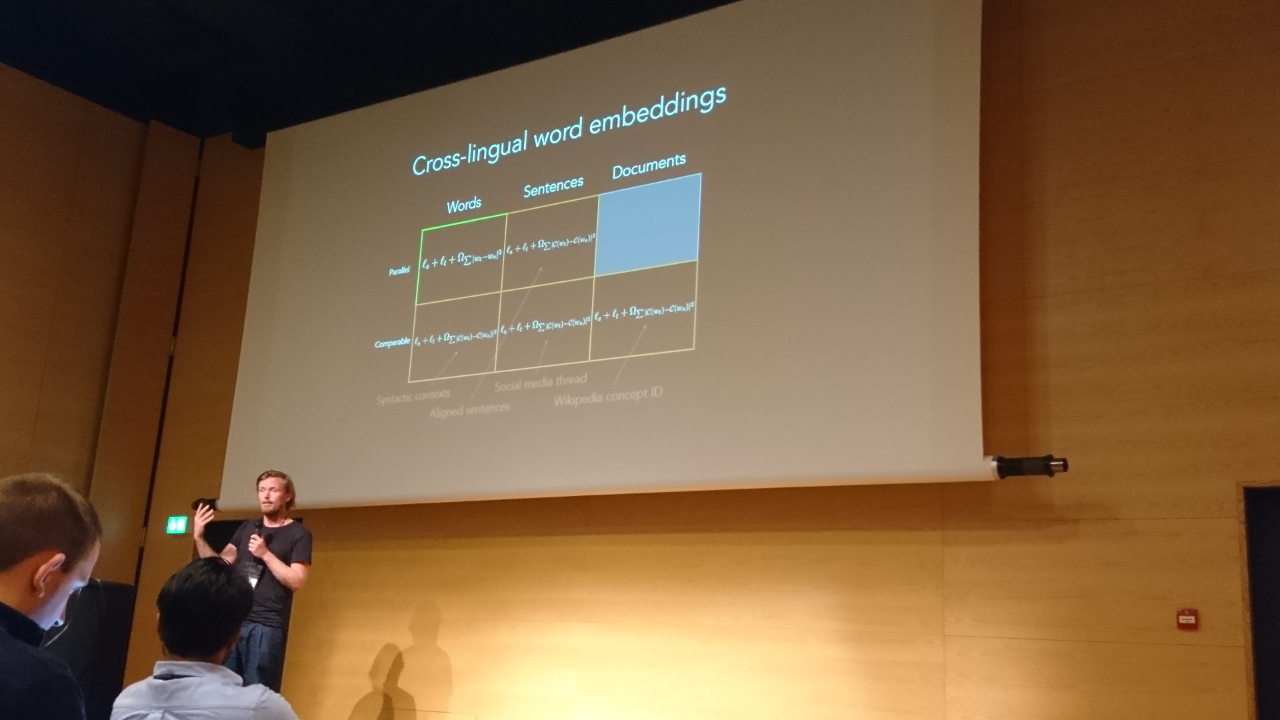

On Friday, Manaal Faruqui, Anders Søgaard, and Ivan Vulić gave a nice tutorial on multilingual embeddings, surveying the field of how to train word embeddings shareable across languages, with the obvious benefit for working with low-resource languages. Søgaard highlighted a useful resource being the Watchtower; a great resource, certainly with a lot of religious stuff, but with contemporary language, and in plenty of languages. “We put it all on github with the blessing of Jehova’s witnesses”.

An example of multilingual (and multi-modal) NLP systems was presented in Image Pivoting for Learning Multilingual Multimodal Representations, Spandana Gella; Rico Sennrich; Frank Keller; Mirella Lapata, (PDF, aclweb.org), where image descriptions in English and German is embedded into the same embedding space as the image itself, allowing for multi-lingual image search.

Cross-Lingual Transfer Learning for POS Tagging without Cross-Lingual Resources, Joo-Kyung Kim; Young-Bum Kim; Ruhi Sarikaya; Eric Fosler-Lussier, (PDF, aclweb.org) showed that using a combination of word-embeddings and character-embeddings, along with “language-adversarial” training (inspired by adversarial training; helps the internal representations to be language agnostic), can be used to transfer-learn a POS tagger with tagged data in one language, and apply it to another language (which is also tagged and used for training, but contains a limited number of examples).

Trainable Greedy Decoding for Neural Machine Translation, Jiatao Gu; Kyunghyun Cho; Victor O.K. Li, (PDF, aclweb.org) proposes a new approach for decoding in a trained NMT model (otherwise, most reseach focuses on the model design and how to train them to achieve good results; decoding is normally done using beam-search). The solution uses the REINFORCE policy gradient method to observe and manipulate the internal state of a trained NMT system, and for most tested language pairs, they show an improvement in BLEU scores.

Inspiration from infants learning

Nando de Freitas gave an exciting keynote speech on Saturday morning, in which he began by saying he didn’t know much about language (something that may indicate that machine learning is becoming such an intricate part of natural language processing that NLP conferences are keen on having prominent machine learning researchers as keynote speakers even if language is not their main field of interest). On his first slide, he showed a video of his daughter as a one-year-old pulling lego blocks apart, and being noteably excited when she finally figured out how to do so.

He continued to talk about how intelligence is a product of the environment, how the learning we do is performed in a context, because we are built with a lot of prior knowledge. The process of learning in a one-year-old child, is not a one-year long process. A child is itself a product of a process that took millions of years. An interesting question follows: how do you learn a system that has the capacity to continue learning?

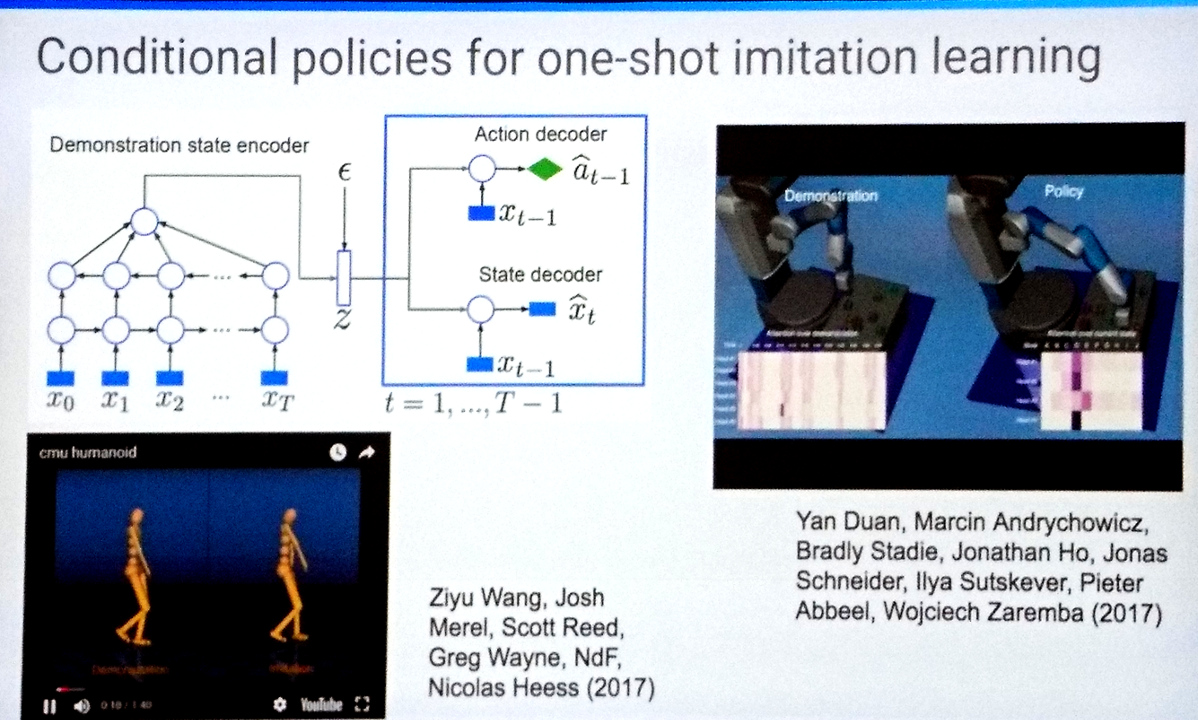

A number of papers has been published the last year about learning to learn, or meta-learning. Learning to learn by gradient descent by gradient descent, Marcin Andrychowicz, Misha Denil, Sergio Gómez, Matthew W. Hoffman, David Pfau, Tom Schaul, Nando de Freitas, (abstract, PDF, nips.cc) was published at NIPS 2016, a really nice paper outlining how to use LSTMs to learn the optimization strategy for an optimization problem. Learning to learn is closely related to zero-shot learning (or few-shot learning) and thus has great applications to natural language tasks, where one often has resources in a few languages but wants the systems to also work on languages with lesser resources.

The route to starting to understand intelligence is to use simulations, Freitas stated. Simulations lets you develop agents with grounded language, in experiments on how to command machines with natural language.

Sharon Goldwater gave her keynote talk about unsupervised speech recognition, using neural embeddings of speech signals, and clustering techniques. Studying unsupervised machine learning techniques allows us to study how language learning could be happening in infants, Goldwater continued. Children learn consonants and vowels simultaneously as they are learning larger language parts such as words and sentences.

Other exciting topics

Learning how to Active Learn: A Deep Reinforcement Learning Approach, Meng Fang; Yuan Li; Trevor Cohn, (PDF, aclweb.org) framed active learning as a decision process and approached it using Deep Q-learning. Previous work have typically used heuristics to select the training data in an active learning setup, something that may not generalize as well. The method is demonstrated on bilingual named entity recognition, where they transfer learning from one source language to a target language.

Repeat before Forgetting: Spaced Repetition for Efficient and Effective Training of Neural Networks, Hadi Amiri; Timothy Miller; Guergana Savova, (PDF, aclweb.org) train a system to choose how often each training example needs to be seen by a neural network during training. Interesting idea, and a nice presentation with motivations from the exponential decay of human memory.

Language grounding was something that Freitas talked a lot about, and there were also a few papers on the topic, this of course is closely connected to multi-modal learning which is covered above.

- Deriving continous grounded meaning representations from referentially structured multimodal contexts, Sina Zarrieß; David Schlangen, (PDF, aclweb.org)

- Sound-Word2Vec: Learning Word Representations Grounded in Sounds, Ashwin Vijayakumar; Ramakrishna Vedantam; Devi Parikh, (PDF, aclweb.org)

Racism. In his keynote presentation, Dan Juravsky talked about his work on analysing the language of police officers stopping vehicles in traffic. Using some simple features such as variations of politeness, friendliness, and respectfulness, they trained a simple supervised classifier, and found that the officers show more respect to white people than to black people. Also more respect towards older people. He concluded with a quote from Herbert H. Clark and Michael F. Schober 1992: “The common misconception is that language has to do with words and what they mean. It doesn’t. It has to do with people and what they mean”.

For those of you who weren’t able to attend, refer to the proceedings, and to the video recordings. To select a talk, you need to click on day and time and room. Refer to the program to know where to look.

Olof Mogren